A/B testing is widely used across industries, and data scientists are at the forefront of implementing it. In this article, I will explain A/B testing in depth and show how a data scientist can use it to recommend changes to a product. You will learn how A/B testing works in data science, how the significance test is carried out, and why A/B testing is worth using.

What is A/B Testing?

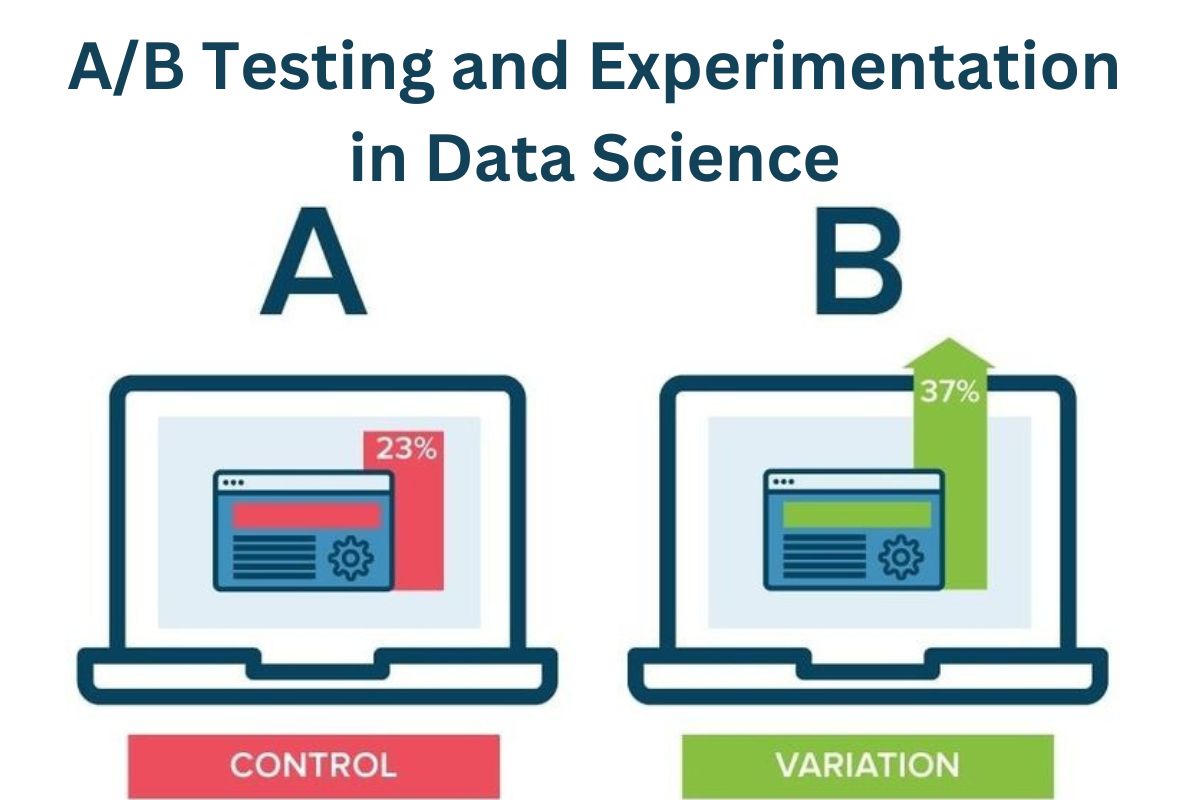

A/B testing is a simple randomised controlled experiment. It compares two versions of a variable to determine which performs better under controlled conditions.

Let’s take the example of a business owner who wishes to boost product sales. There are two options in this situation: run ad hoc experiments or use scientific and statistical approaches. A/B testing is among the best-known and most widely used statistical methods.

You could split the products in the scenario above into two groups, A and B. A stays the same, while B’s packaging is significantly altered. Based on the responses of the customer groups who used A and B respectively, you then try to determine which one performs better.

It is a hypothesis-testing methodology that uses sample statistics to estimate population parameters for decision-making. The population is all the customers who purchase your product, whereas the sample is the customers who took part in the test.

How does A/B Testing Work?

The crucial query!

Let’s examine the reasoning and process underlying the idea of A/B testing in this section using an example.

Assume that XYZ is an online retailer. In an effort to boost website traffic, it wants to make some adjustments to its newsletter. It designates the original newsletter as A and, after changing some of the wording, calls the modified version B. Other than that, the format, colour, and headlines of both newsletters are identical.

Objective

Here, our goal is to determine which newsletter brings more traffic to the website, measured as the conversion rate. We’ll gather data and run an A/B test to determine which newsletter works best.

Making a Hypothesis

Before we formulate our hypothesis, let’s define what a hypothesis is.

A hypothesis is an unproven theory that, if proven, would explain a certain fact or phenomenon. It is a tentative understanding of the natural world.

It is an educated estimation based on something observed in your environment. It ought to be verifiable through observation or experimentation. The hypothesis in our case could be, “We can increase website traffic by changing the newsletter’s language.”

We must formulate two hypotheses for hypothesis testing: the alternative hypothesis and the null hypothesis. Let’s examine each of them.

Null Hypothesis or H0

The theory that claims sample observations are the product of pure chance is known as the null hypothesis. The null hypothesis, as seen through the lens of an A/B test, asserts that there is no distinction between the control and variation groups. It specifies the current state of affairs, or the status quo, as the default position to be tested. “There is no difference in the conversion rate in customers receiving newsletter A and B” is our H0 in this case.

Alternative Hypothesis or Ha

In essence, the alternative hypothesis is the theory that the researcher believes to be true and that contradicts the null hypothesis. It is what you hope your A/B test will show.

The statement “The conversion rate of customers receiving newsletter B is higher than that of customers receiving newsletter A” is our Ha in this scenario.

In order to reject the null hypothesis, we must now gather sufficient evidence through our tests.

Create Control Group and Test Group

With our null and alternative hypotheses ready, the next step is to select the customers who will take part in the test. We have two groups here: the Control group and the Test (variant) group.

The Control Group will receive newsletter A, and the Test Group will receive newsletter B.

A total of 1000 clients were chosen at random for this experiment, 500 for each of the Control and Test groups.

Random sampling is the process of choosing a sample at random from the population. With this method, every member of the population has an equal probability of being selected. Random sampling is crucial in hypothesis testing because it removes sampling bias: you want your A/B test findings to reflect the overall population rather than just the sample.
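As a minimal sketch of this step, the snippet below draws a random sample from a customer list and splits it evenly into Control and Test groups. The population of 20,000 customer IDs, the function name sample_and_split, and the seed are all assumptions made for illustration; only the 1000 and 500 figures come from the example above.

```python
import random

def sample_and_split(population_ids, sample_size, seed=None):
    """Draw a simple random sample, then split it into two equal groups."""
    rng = random.Random(seed)
    # rng.sample picks without replacement and returns the picks in random order,
    # so cutting the list in half is itself a random assignment.
    sample = rng.sample(list(population_ids), sample_size)
    half = sample_size // 2
    return sample[:half], sample[half:]

# Hypothetical population: customer IDs 1..20000.
population = range(1, 20001)
control_group, test_group = sample_and_split(population, 1000, seed=42)
print(len(control_group), len(test_group))
```

Fixing the seed makes the assignment reproducible, which helps when the split has to be audited later.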

The sample size is another crucial consideration. To prevent undercoverage bias, the bias that stems from sampling too few observations, we must determine the minimum sample size for our A/B test before running it.
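One common way to estimate a minimum sample size is the normal-approximation formula for comparing two proportions. The sketch below assumes that approach; the significance level of 0.05, the power of 0.8, and the function name min_sample_size are illustrative choices, not values given in this article.

```python
import math
from statistics import NormalDist

def min_sample_size(p_control, p_test, alpha=0.05, power=0.8):
    """Approximate customers needed per group to detect p_control vs p_test.

    Uses the two-sided normal approximation for two proportions:
    n = (z_(1-alpha/2) + z_power)^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance_sum = p_control * (1 - p_control) + p_test * (1 - p_test)
    n = (z_alpha + z_power) ** 2 * variance_sum / (p_control - p_test) ** 2
    return math.ceil(n)

# Assumed baseline of 16% and a hoped-for 19% conversion rate.
n_per_group = min_sample_size(0.16, 0.19)
print(n_per_group)
```

The smaller the difference you want to detect, the larger the required sample, because the squared difference sits in the denominator.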

Conduct the A/B Test and Collect the Data

One way to carry out the test is to calculate the daily conversion rates for the test and control groups. Because the conversion rate within a group on a given day is a single data point, the sample size is actually the number of days. We therefore compare the means of the daily conversion rates of the two groups over the testing period.
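The aggregation described here can be sketched as follows. The record layout of (day, group, converted) tuples and the tiny data set are hypothetical, chosen only to show how each day collapses into one data point per group.

```python
from collections import defaultdict
from statistics import mean

def daily_conversion_rates(records):
    """Turn (day, group, converted) records into a list of daily rates per group."""
    counts = defaultdict(lambda: [0, 0])  # (day, group) -> [conversions, recipients]
    for day, group, converted in records:
        counts[(day, group)][0] += int(converted)
        counts[(day, group)][1] += 1
    rates = defaultdict(list)
    for (day, group), (conversions, recipients) in sorted(counts.items()):
        rates[group].append(conversions / recipients)
    return dict(rates)

# Hypothetical records: two days, a handful of customers per group.
records = [
    (1, "control", True), (1, "control", False), (1, "control", False), (1, "control", False),
    (1, "test", True), (1, "test", True), (1, "test", False), (1, "test", False),
    (2, "control", True), (2, "control", False),
    (2, "test", False), (2, "test", True),
]
rates = daily_conversion_rates(records)
for group, daily in rates.items():
    print(group, daily, "mean:", mean(daily))
```

Each list holds one value per day, so a month-long test yields about 30 data points per group regardless of how many customers were contacted.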

Following a month-long trial, we found that the test group’s mean conversion rate was 19%, whereas the control group’s was 16%.

Data Science in A/B Testing

Data scientists utilise A/B testing as a basic tool to optimise and enhance many elements of products and services. In essence, two versions of something (A and B) are compared in a controlled trial to determine which performs better according to a predetermined criterion.

This is an example of how data science uses A/B testing:

Core Concept

Divide the user base or target audience into two random groups.

Present distinct iterations (A or B) of the element under examination to every group. This could be the design of a product, an advertisement, an email format, a website, etc.

Gather information about user behaviour and assess the effect of every version on a certain statistic (e.g., click-through rate, conversion rate, sales).

To find out if there is a performance difference between A and B, undertake a statistical analysis of the data.

Participation of Data Science:

At several phases of A/B testing, data scientists are essential:

Creating the Test: They assist in formulating a precise hypothesis and specifying the success metric.

They also determine the sample size required for statistical significance.

Constructing the Experiment: Data scientists may build tools to assign people to groups at random and ensure that each variant is delivered correctly.

Data Analysis: To examine the validity of the findings and analyse the gathered data, they use statistical techniques. This entails using methods such as p-value computations and hypothesis testing to ascertain whether the observed difference is the result of random variation or a real effect.

Understanding and Suggestion:

Data scientists take statistical significance and effect size into account when interpreting the results.

They then recommend adopting the winning variant, refining the test, or ending the experiment.
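Effect size can be expressed in several ways. The sketch below shows two common ones, assuming the analyst has the group means and the daily rates at hand: the lift between the two conversion rates and Cohen's d with a pooled standard deviation. The function names are illustrative, and the 16% and 19% inputs are the mean conversion rates reported earlier in this article.

```python
import math
from statistics import mean, variance

def lift(control_rate, test_rate):
    """Return (absolute lift, relative lift) of the test rate over the control rate."""
    absolute = test_rate - control_rate
    return absolute, absolute / control_rate

def cohens_d(control, test):
    """Standardised mean difference using the pooled sample standard deviation."""
    n1, n2 = len(control), len(test)
    pooled_var = ((n1 - 1) * variance(control) + (n2 - 1) * variance(test)) / (n1 + n2 - 2)
    return (mean(test) - mean(control)) / math.sqrt(pooled_var)

absolute_lift, relative_lift = lift(0.16, 0.19)
print(f"absolute lift: {absolute_lift:.2%}, relative lift: {relative_lift:.2%}")
```

A result can be statistically significant yet too small to matter commercially, which is why the lift is usually reported alongside the p-value.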

Statistical Significance of the Test

The key query at this point is: Is it possible to draw the conclusion that the test group is performing better than the control group?

The response to this is a resounding no! We must demonstrate the test’s statistical significance in order to reject our null hypothesis.

We can run across two different kinds of errors when testing our hypothesis:

Type I error: We reject the null hypothesis when it is true. That is, we accept variant B when it does not actually outperform variant A.

Type II error: We fail to reject the null hypothesis when it is false. That is, we conclude that variant B is no better when it actually does outperform variant A.

To limit the risk of these mistakes, we need to determine the test’s statistical significance.
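A small simulation can make the two error types concrete. This sketch swaps in a one-sided two-proportion z-test on per-customer conversions rather than the t-test on daily rates used in this article, purely because it is short to write with the standard library. The rates of 16% and 19%, the group size of 500, the 0.05 threshold, and the number of runs are assumptions for illustration.

```python
import math
import random
from statistics import NormalDist

def one_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test p-value for the alternative: rate of B > rate of A."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence against H0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 1 - NormalDist().cdf(z)

def rejection_rate(rate_a, rate_b, n=500, alpha=0.05, runs=1000, seed=0):
    """Fraction of simulated experiments in which H0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(runs):
        conv_a = sum(rng.random() < rate_a for _ in range(n))
        conv_b = sum(rng.random() < rate_b for _ in range(n))
        if one_sided_p_value(conv_a, n, conv_b, n) < alpha:
            rejections += 1
    return rejections / runs

# H0 true (identical rates): every rejection is a Type I error.
type_1_rate = rejection_rate(0.16, 0.16)
# H0 false (B really is better): every failure to reject is a Type II error.
type_2_rate = 1 - rejection_rate(0.16, 0.19)
print(f"Type I rate: {type_1_rate:.3f}, Type II rate: {type_2_rate:.3f}")
```

The Type I rate should hover around the chosen threshold, while the Type II rate depends on the sample size and on how large the true difference is.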

When there is sufficient data to demonstrate that the outcome observed in the sample is also present in the population, an experiment is deemed statistically significant.

This means that the difference between your control version and the test version is unlikely to be due to error or random chance. A two-sample t-test can be used to establish the experiment’s statistical significance.

The two-sample t-test is one of the most commonly used hypothesis tests. It is used to determine whether the means of two groups differ significantly.
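A two-sample t-test on daily conversion rates could look like the sketch below. It assumes Welch's version of the test, which does not require equal variances, and computes a one-sided p-value to match our Ha by numerically integrating the t density, so that only the standard library is needed. The daily rates are synthetic values generated for illustration, not the data from this experiment.

```python
import math
import random
from statistics import mean, variance

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))

def t_sf(t, df, steps=2000):
    """P(T > t), integrating the density from 0 to |t| with Simpson's rule."""
    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    upper_tail = 0.5 - total * h / 3
    return upper_tail if t >= 0 else 1 - upper_tail

def welch_t_test(control, test):
    """Return (t statistic, degrees of freedom, one-sided p-value for test > control)."""
    n1, n2 = len(control), len(test)
    v1, v2 = variance(control) / n1, variance(test) / n2
    t_stat = (mean(test) - mean(control)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t_stat, df, t_sf(t_stat, df)

# Synthetic daily conversion rates for a 30-day test (hypothetical data).
rng = random.Random(7)
control_rates = [rng.gauss(0.16, 0.03) for _ in range(30)]
test_rates = [rng.gauss(0.19, 0.03) for _ in range(30)]
t_stat, df, p_value = welch_t_test(control_rates, test_rates)
print(f"t = {t_stat:.2f}, df = {df:.1f}, one-sided p = {p_value:.4f}")
```

If the p-value falls below the chosen significance level, commonly 0.05, we reject the null hypothesis. In practice a statistics library would normally replace the hand-rolled integration.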

Endnotes

In summary, A/B testing is a statistical methodology that dates back at least a century, although its current form dates back to the 1990s. With the advent of big data and the internet environment, it has gained greater prominence. It is now simpler for businesses to run the test and apply the findings to improve the user experience and performance.

A/B testing can be done using a variety of tools, but as a data scientist, you need to know the underlying principles. To validate the test and demonstrate its statistical significance, you also need to be knowledgeable about the statistics.

We sincerely hope that the post has helped you better grasp A/B testing in data science and how it operates.